About Me

I’m a Robotics Engineer specializing in SLAM, navigation, computer vision, and reinforcement learning. Over more than three years, I’ve helped develop a range of autonomous systems and robotic platforms.

I am currently pursuing a Master’s degree in Robotics at Purdue University. My work focuses on multimodal models, reinforcement learning, and classical control theory, with the goal of making robotic operations more efficient and intelligent.

Education

Purdue University, West Lafayette

M.S. in Robotics • 2025–Present

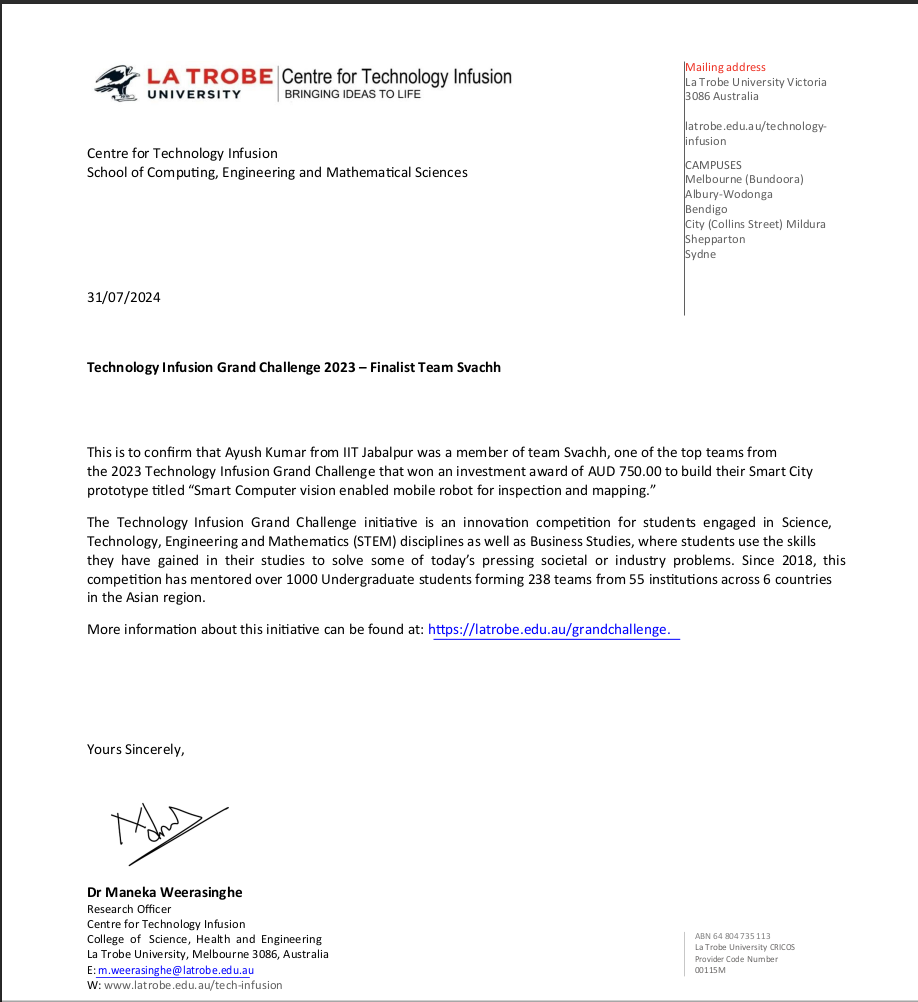

IIIT Jabalpur

B.Tech. in ECE • 2019–2023