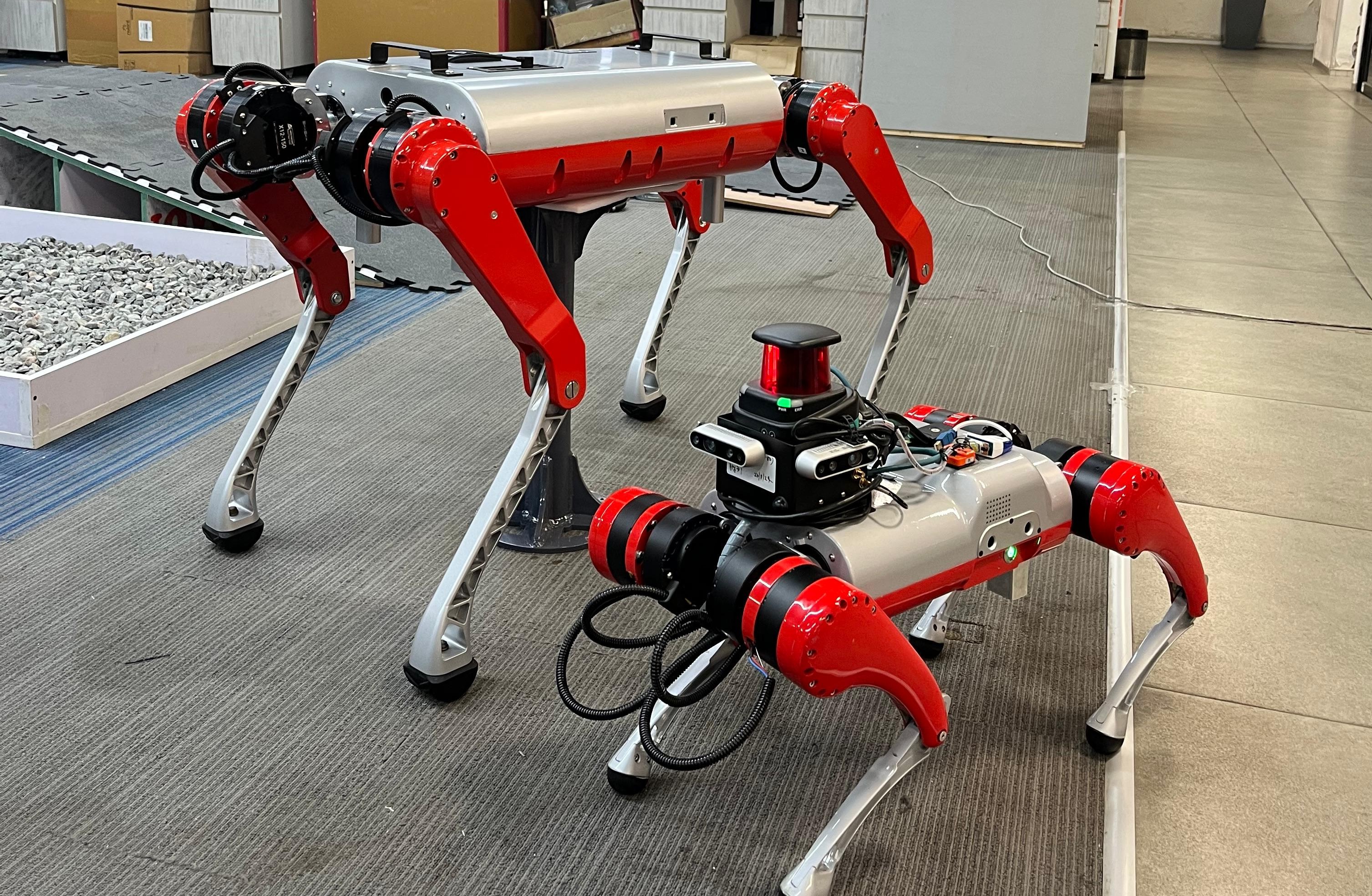

Addverb

I joined Addverb in 2023 as a Robotics Intern, initially focusing on firmware development. Over time, I moved to the application layer of the robots, contributing primarily to the Navigation, SLAM, and Computer Vision modules.