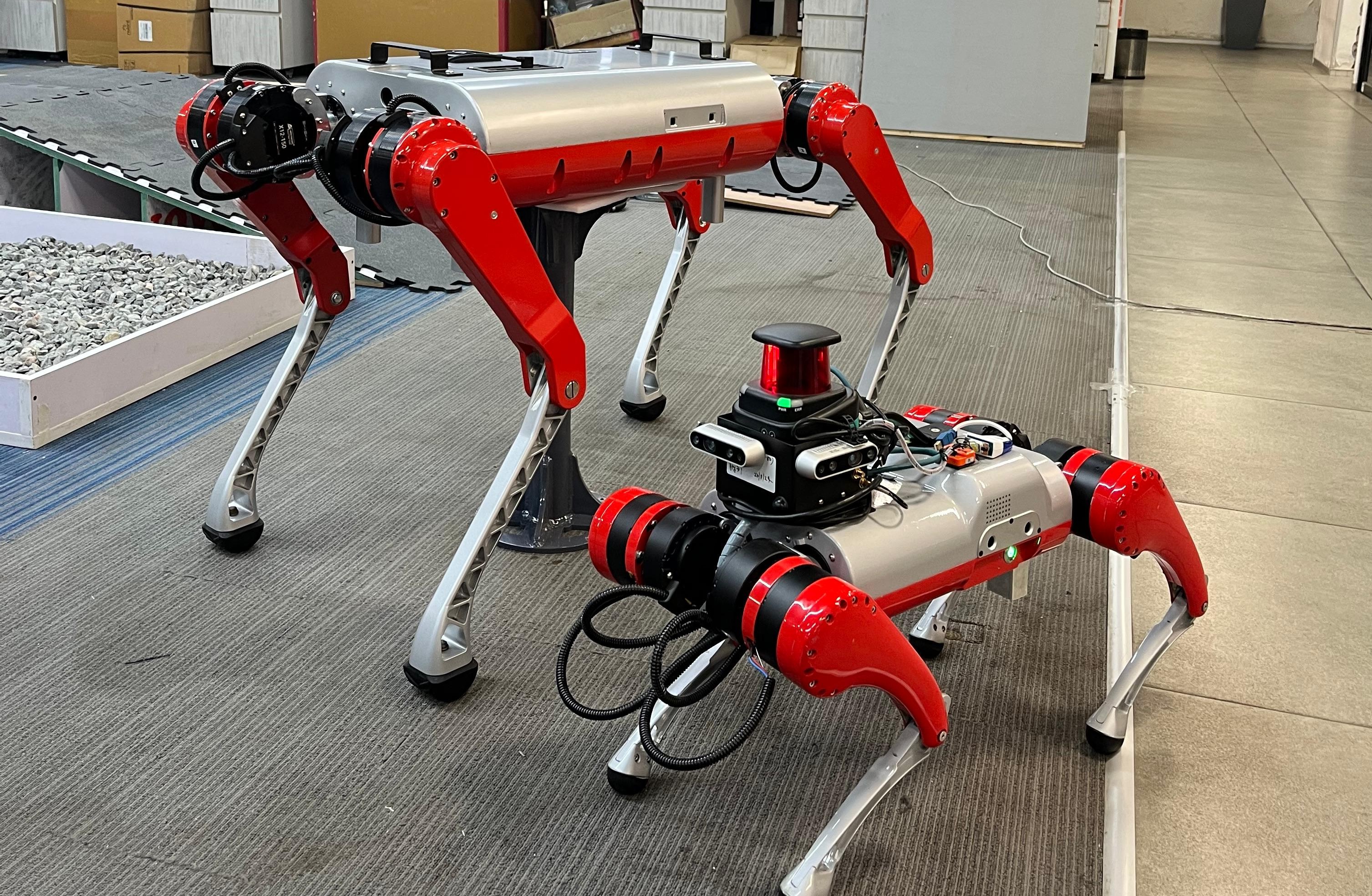

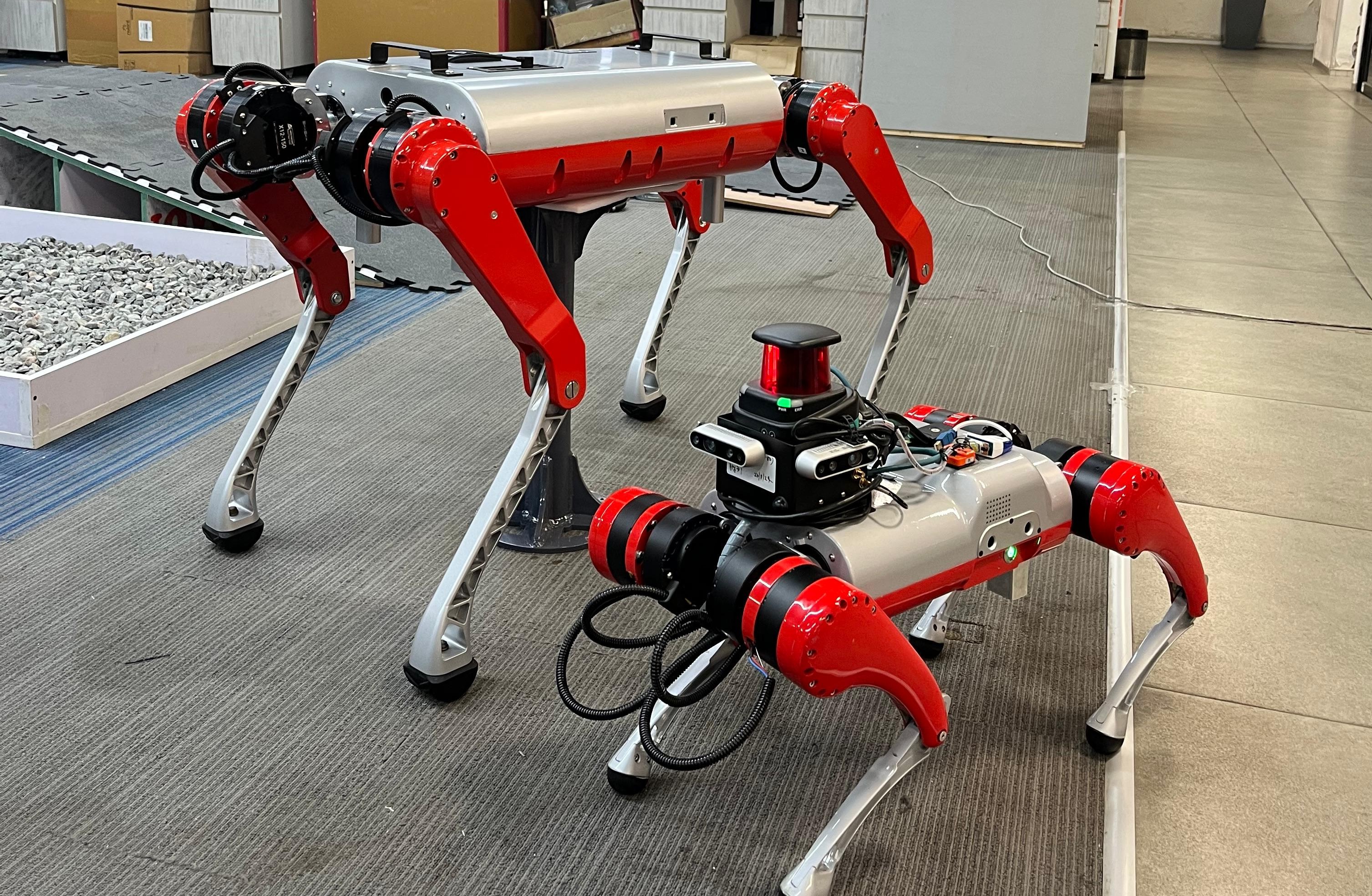

Autonomy Stack for Quadruped - Addverb

Developed the autonomy stack—including SLAM and navigation—for Addverb’s in-house quadruped, TRAKR, enabling efficient navigation in unknown environments with minimal human intervention.


Developing autonomy pipelines for wheeled robots is comparatively easy because wheel encoders provide a reliable source of odometry. Legged robots such as quadrupeds and humanoids have no equivalent: kinematic leg odometry is corrupted by foot slip and touchdown impacts, so obtaining accurate and consistent odometry remains a challenge.

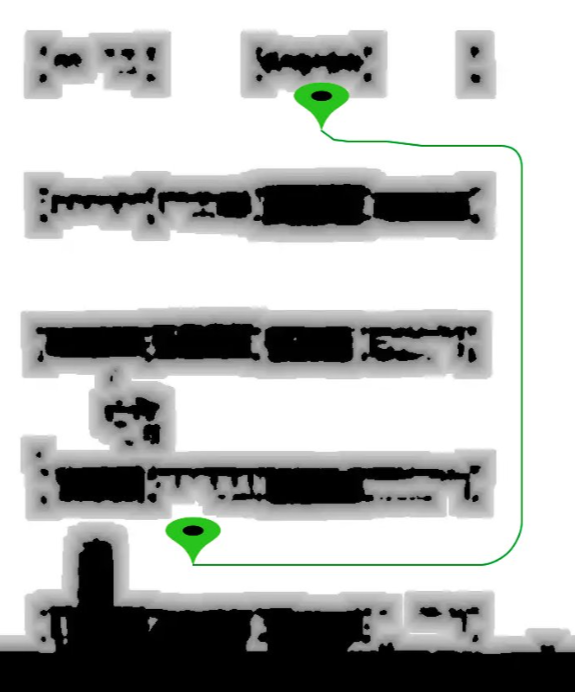

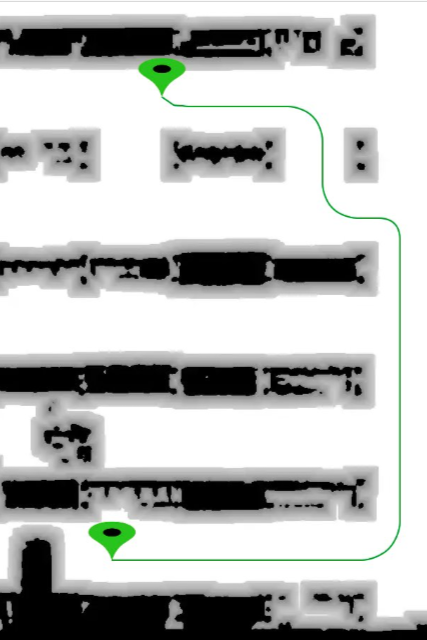

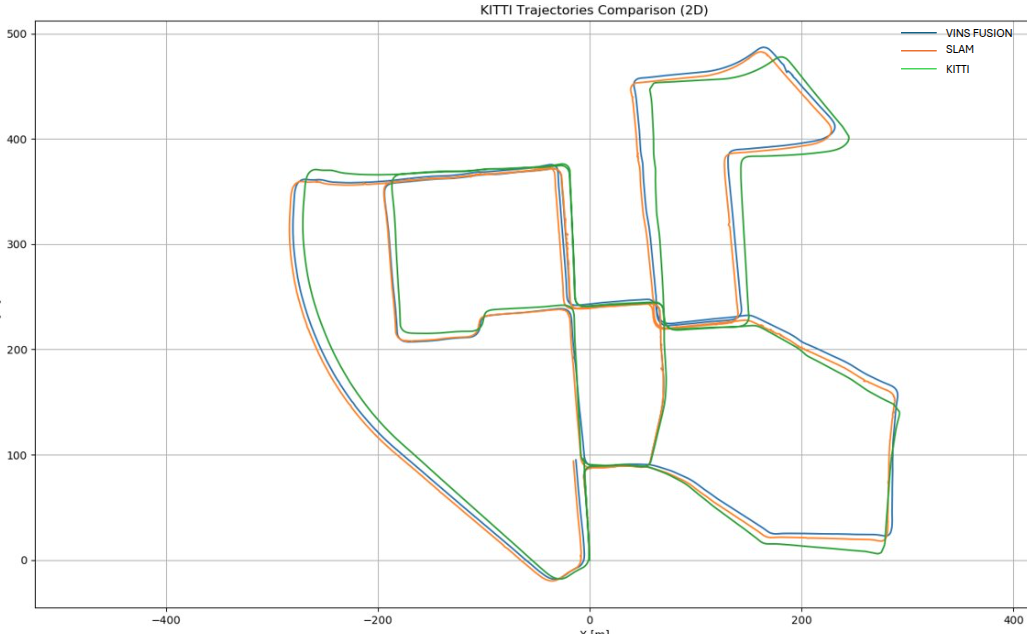

To address this challenge, we built the localization around a visual-inertial SLAM pipeline, which performs well in most scenarios. Because visual tracking often fails in featureless or textureless environments, we added a LiDAR fallback mechanism. Using a 2D LiDAR kept hardware costs low while still delivering reliable results.
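The page does not say how the system decides when to fall back to LiDAR. One plausible scheme, sketched here purely as an illustration, monitors visual tracking health and switches sources with hysteresis so the estimator does not chatter between them. The class `FallbackSwitch`, its thresholds, and the use of the tracked-feature count as the health signal are all assumptions, not details of the TRAKR stack.

```python
from dataclasses import dataclass


@dataclass
class FallbackSwitch:
    """Hypothetical selector between visual-inertial (VIO) and 2D LiDAR odometry.

    All thresholds are illustrative assumptions, not tuned TRAKR values.
    """
    min_features: int = 50       # below this, VIO is treated as degraded
    recover_features: int = 80   # VIO must exceed this to be trusted again (hysteresis)
    recover_frames: int = 10     # consecutive healthy frames needed before switching back
    source: str = "vio"
    _healthy_streak: int = 0

    def update(self, tracked_features: int, vio_tracking_ok: bool) -> str:
        """Feed one frame of tracking statistics; return the active odometry source."""
        if self.source == "vio":
            # Drop to LiDAR immediately when visual tracking degrades.
            if not vio_tracking_ok or tracked_features < self.min_features:
                self.source = "lidar"
                self._healthy_streak = 0
        else:
            # Only return to VIO after a sustained run of healthy frames.
            if vio_tracking_ok and tracked_features >= self.recover_features:
                self._healthy_streak += 1
                if self._healthy_streak >= self.recover_frames:
                    self.source = "vio"
                    self._healthy_streak = 0
            else:
                self._healthy_streak = 0
        return self.source
```

The asymmetry (fail fast, recover slowly) is a common design choice for fallback logic, since briefly trusting a recovering visual tracker is usually costlier than staying on LiDAR a few frames longer.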

For our SLAM system, we designed a visual-inertial frontend that uses Structure-from-Motion (SfM) with ORB features and refines each new pose with motion-only bundle adjustment. On the backend, a LiDAR–visual full bundle adjustment corrects accumulated errors, and a Bag-of-Words loop closure module detects revisits to previously mapped places.

For the navigation stack, we implemented a custom Hybrid A*-style planner optimized for faster computation, paired with a Pure Pursuit controller for path tracking and a tailored decision tree for high-level decision-making.
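Motion-only bundle adjustment keeps the map points fixed and optimizes only the current camera pose against the reprojection error of its matched features. The following is a minimal numpy sketch of that idea under stated assumptions: a pinhole camera with known intrinsics `(fx, fy, cx, cy)`, 2D-3D correspondences already established by ORB matching, plain Gauss-Newton with a numerical Jacobian, and no robust kernel (practical systems usually add one, such as Huber). The function names are hypothetical.

```python
import numpy as np


def so3_exp(w):
    """Rodrigues' formula: rotation vector (axis * angle) -> 3x3 rotation matrix."""
    theta = np.linalg.norm(w)
    if theta < 1e-12:
        return np.eye(3)
    k = w / theta
    K = np.array([[0.0, -k[2], k[1]],
                  [k[2], 0.0, -k[0]],
                  [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * (K @ K)


def project(R, t, pts, fx, fy, cx, cy):
    """Pinhole projection of world points (N x 3) under world-to-camera pose (R, t)."""
    pc = pts @ R.T + t
    u = fx * pc[:, 0] / pc[:, 2] + cx
    v = fy * pc[:, 1] / pc[:, 2] + cy
    return np.stack([u, v], axis=1)


def motion_only_ba(R, t, pts, obs, intrinsics, iters=10, eps=1e-6):
    """Refine only the pose (R, t); the 3D map points `pts` stay fixed.

    Pose update: R <- exp(dphi) R, t <- t + dt, with delta = (dphi, dt).
    """
    def residuals(R_, t_):
        return (project(R_, t_, pts, *intrinsics) - obs).ravel()

    for _ in range(iters):
        r = residuals(R, t)
        J = np.empty((r.size, 6))
        for j in range(6):  # forward-difference Jacobian, one column per pose parameter
            d = np.zeros(6)
            d[j] = eps
            J[:, j] = (residuals(so3_exp(d[:3]) @ R, t + d[3:]) - r) / eps
        delta, *_ = np.linalg.lstsq(J, -r, rcond=None)  # Gauss-Newton step
        R = so3_exp(delta[:3]) @ R
        t = t + delta[3:]
        if np.linalg.norm(delta) < 1e-10:
            break
    return R, t
```

Because only six parameters are optimized, this step is cheap enough to run on every frame, which is why frontends typically use it for tracking and leave joint point-and-pose optimization to the backend.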
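A Bag-of-Words loop closure module turns each keyframe's ORB descriptors into a weighted histogram of visual words and compares histograms to find revisited places. The sketch below assumes descriptors have already been quantized into integer word IDs by a vocabulary (that step is omitted), weights words with TF-IDF, and scores pairs with the L1 similarity used by DBoW2, `s(v1, v2) = 1 - 0.5 * || v1/|v1| - v2/|v2| ||_1`. The helper names and the `min_score` threshold are illustrative assumptions.

```python
import math
from collections import Counter


def compute_idf(keyframe_words):
    """idf(w) = log(N / N_w): N keyframes in total, N_w of them contain word w."""
    n = len(keyframe_words)
    doc_freq = Counter(w for words in keyframe_words for w in set(words))
    return {w: math.log(n / df) for w, df in doc_freq.items()}


def tfidf_vector(word_ids, idf):
    """Sparse BoW vector {word_id: tf * idf} for one keyframe."""
    counts = Counter(word_ids)
    total = len(word_ids)
    return {w: (c / total) * idf.get(w, 0.0) for w, c in counts.items()}


def l1_score(v1, v2):
    """L1 similarity in [0, 1]; 1 means identical normalized vectors, 0 means disjoint."""
    n1 = sum(abs(x) for x in v1.values())
    n2 = sum(abs(x) for x in v2.values())
    if n1 == 0.0 or n2 == 0.0:
        return 0.0
    diff = sum(abs(v1.get(w, 0.0) / n1 - v2.get(w, 0.0) / n2) for w in set(v1) | set(v2))
    return 1.0 - 0.5 * diff


def loop_candidates(query, database, min_score=0.3):
    """Keyframes scoring at least `min_score` (hypothetical threshold), best first."""
    scored = ((kf_id, l1_score(query, vec)) for kf_id, vec in database.items())
    return sorted((s for s in scored if s[1] >= min_score), key=lambda s: s[1], reverse=True)
```

Candidates returned this way are only appearance matches; a loop closure module would normally verify them geometrically before adding a constraint to the map.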
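The planner is described only as a custom Hybrid A*-style planner optimized for speed, so what follows is a generic textbook Hybrid A* sketch, not that implementation. It keeps continuous (x, y, yaw) states but prunes duplicates with a discretized (cell, heading-bin) key, expands a few constant-curvature motion primitives, and uses Euclidean distance as the heuristic. The occupancy representation, primitive set, turning penalty, and every parameter value are assumptions; collision is checked only at arc endpoints, which a real planner would tighten.

```python
import heapq
import math


def hybrid_a_star(start, goal, occupied, grid_res=0.5, n_headings=16, step=0.5,
                  curvatures=(-1.0, 0.0, 1.0), bounds=(-10.0, 10.0),
                  goal_tol=0.5, max_iters=20000):
    """Generic forward-only Hybrid A* sketch (hypothetical parameters).

    start: (x, y, yaw); goal: (x, y); occupied: set of blocked (ix, iy) grid cells.
    Returns a list of (x, y, yaw) states from start to goal, or None.
    """
    def key(x, y, th):
        # Discretize the continuous state into a (cell, heading-bin) key for pruning.
        bin_width = 2 * math.pi / n_headings
        return (math.floor(x / grid_res), math.floor(y / grid_res),
                int(round((th % (2 * math.pi)) / bin_width)) % n_headings)

    def free(x, y):
        return (bounds[0] <= x <= bounds[1] and bounds[0] <= y <= bounds[1]
                and (math.floor(x / grid_res), math.floor(y / grid_res)) not in occupied)

    def h(x, y):
        return math.hypot(goal[0] - x, goal[1] - y)  # admissible Euclidean heuristic

    k0 = key(*start)
    open_heap = [(h(start[0], start[1]), 0.0, start)]
    parent, state_of, best_g = {k0: None}, {k0: start}, {k0: 0.0}
    for _ in range(max_iters):
        if not open_heap:
            return None
        _, g, (x, y, th) = heapq.heappop(open_heap)
        k = key(x, y, th)
        if g > best_g.get(k, math.inf):
            continue  # stale heap entry superseded by a cheaper one
        if h(x, y) <= goal_tol:
            path = []
            while k is not None:  # walk parent pointers back to the start
                path.append(state_of[k])
                k = parent[k]
            return path[::-1]
        for kappa in curvatures:
            # Integrate a short constant-curvature arc using the midpoint heading.
            mid = th + 0.5 * kappa * step
            nx, ny, nth = x + step * math.cos(mid), y + step * math.sin(mid), th + kappa * step
            if not free(nx, ny):
                continue
            nk = key(nx, ny, nth)
            ng = g + step * (1.0 + 0.2 * abs(kappa))  # small extra cost for turning
            if ng < best_g.get(nk, math.inf):
                best_g[nk], parent[nk], state_of[nk] = ng, k, (nx, ny, nth)
                heapq.heappush(open_heap, (ng + h(nx, ny), ng, (nx, ny, nth)))
    return None
```

The coarse key is what makes Hybrid A* tractable: many continuous states collapse onto one cell and heading bin, so the search size is bounded by the grid rather than by the number of reachable poses.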
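Pure Pursuit steers toward a point a fixed lookahead distance `L_d` ahead on the path, commanding the arc of curvature `kappa = 2 * sin(alpha) / L_d`, where `alpha` is the bearing of that point in the robot frame. A minimal sketch, assuming a unicycle-style `(v, omega)` velocity interface for the quadruped and a path given as a list of (x, y) waypoints; the function name, lookahead, and speed values are illustrative.

```python
import math


def pure_pursuit(pose, path, lookahead=0.6, v=0.5):
    """Return (v, omega) that steers the robot at `pose` = (x, y, yaw) along `path`."""
    x, y, yaw = pose
    # Start searching from the closest waypoint so points behind the robot are skipped.
    closest = min(range(len(path)), key=lambda i: math.hypot(path[i][0] - x, path[i][1] - y))
    target = path[-1]  # fall back to the final waypoint near the end of the path
    for px, py in path[closest:]:
        if math.hypot(px - x, py - y) >= lookahead:
            target = (px, py)
            break
    dx, dy = target[0] - x, target[1] - y
    lateral = -math.sin(yaw) * dx + math.cos(yaw) * dy  # target's y in the robot frame
    dist = math.hypot(dx, dy)
    if dist < 1e-9:
        return 0.0, 0.0  # already at the target
    curvature = 2.0 * lateral / dist ** 2  # equals 2 sin(alpha) / L_d
    return v, v * curvature
```

The lookahead distance is the main tuning knob: a longer one gives smoother but lazier tracking, a shorter one tracks tightly but can oscillate.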